Artificial Intelligence Pedagogy: A Primer (Part I)

Key concepts & reflections associated with AI's purpose & role in Higher Education

As we begin preparing for the upcoming Fall 2024 semester, it’s time to review and reflect on the pedagogical foundations associated with integrating Artificial Intelligence (AI) into our classrooms.

While there may be disagreement over the approaches laid out below, they are worthy of attention.

Artificial Intelligence developers and their models are receiving a great deal of warranted criticism, and that criticism should not be overlooked when AI tools are promoted in the Higher Education space. Students will benefit from real-world discussions addressing the complicated, evolving, and nuanced nature of Artificial Intelligence development, operation, and use.

We are still in the innovation phase of AI’s development, and given the continued rapid and dramatic advancements in model functionality, it’s difficult to predict the end game regarding AI’s overall impact on teaching and learning.

#1: Do we really have to discuss pedagogy? Students are all on board, aren’t they?

The recent (May 2024) report “AI and Academia: Student Perspectives and Ethical Implications” reveals growing concern about AI’s impact on learning. Of the more than 1,300 college-bound high school students interviewed, 43% said they “believe using [AI] tools contributes to a significant decline in critical thinking and creativity,” and 40% think that “using AI is a form of cheating” and that “AI tools contribute to misinformation.”

#2: Can I require my students to use AI?

“Can I require my students to use AI?” My response to that question is no, you can’t. Students’ concerns about AI use are genuine, and respecting them is part of promoting an inclusive and equitable learning environment.

Cost: students may not be able to afford (or may not want to pay for) upgraded access; students who can pay get more functionality and an overall performance advantage (though that last point is debatable!)

Ethics: there are very serious and legitimate ethical grounds for not using AI: model secrecy, misrepresentation of functionality, data collection and use, labor exploitation, natural resource and electricity consumption, limited regulation and oversight, theft of intellectual property and copyrighted material, hallucinations, and bias.

Technology: some students may not have access to the infrastructure (devices, connectivity) that AI tools require.

Legality: many AI tools’ terms of use require permission from the parents or guardians of any user under 18. An AI permission form can address this (the form referenced here comes from North Carolina's "Generative AI Implementation Recommendations and Considerations for PK-13 Public Schools" publication, 01/16/24, p. 9).

Privacy: students may not want to share personal information with AI companies or expand their digital footprint; moreover, AI can infer traits and characteristics of its users even when that information is not intentionally shared.

If you are motivated to require AI use on the grounds that “students need to develop AI skills,” consider that “AI skills” don't really exist! The skill set needed to use AI well is fundamental: clear and concise communication, content evaluation, problem-solving, ethical awareness, openness to new ideas, flexibility, adaptability, etc.

For a parallel discussion of technology’s impact on skill development, in the context of creativity in the music industry, watch Rick Beato’s “The Real Reason Why Music Is Getting Worse.”

#3: How can I establish parameters or conditions for AI use in my classes?

If AI use is NOT ALLOWED:

Clearly articulate to students the justifications for prohibiting AI use

Restate course AI policy and AI use in the context of academic integrity

If AI use IS ALLOWED:

Clearly articulate to students the justifications for allowing AI use

Restate course AI policy and AI use in the context of academic integrity

Determine the acceptable levels of AI use (these will most likely differ by task, assignment, or project)

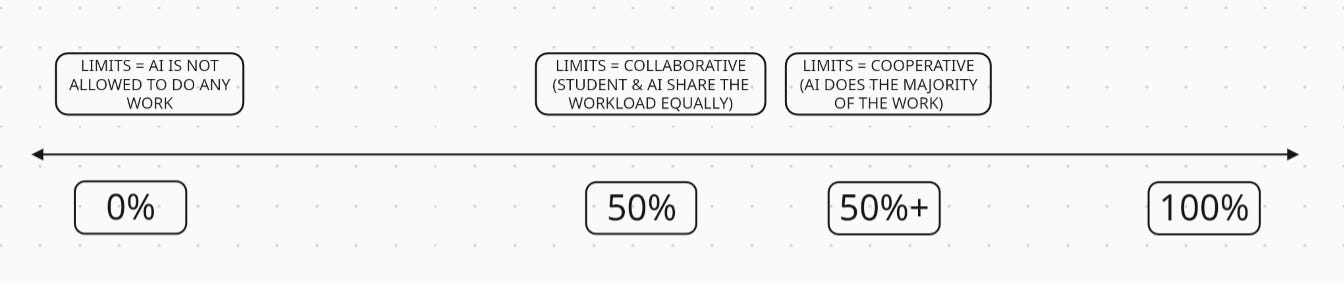

Collaborative = student and AI share the workload equally; here, “collaborate” means that each participant contributes the same amount of effort

Cooperative = AI does the majority of the work; here, “cooperate” means that the participants do not contribute the same amount of effort

Admittedly, determining contribution levels (percentages or amounts) is not an exact (or easy) science, but a good rule of thumb is to ask yourself, “Am I having the student perform the detailed, complex, time-consuming task, or is it being passed on to AI?”

If AI use IS ALLOWED . . . it should not be REQUIRED:

Clearly tell students that they will be neither disadvantaged nor penalized for not using AI (this avoids indirect pressure to use it).

Instructors need to keep in mind that non-AI work will likely:

not be produced as quickly as AI work or in as great a quantity (or length)

not contain as much multi-media (AI can easily produce videos, animation, music, sounds, and images)

not be as dynamic (AI can generate mind maps, working computer programs, timelines, spreadsheets, etc.)

not appear as polished/refined

not come across as confident or assured as AI work

not be as correct (in most cases) in terms of grammar, vocabulary, translation, or coding

If necessary, develop rubrics (or additional rubric categories) for evaluating both non-AI work and AI-enhanced/assisted work

Share the rubrics with all students, regardless of AI choices

If AI is being used to EVALUATE student work:

Tell students which AI tool is being used

Explain to students why and how AI is being used to evaluate their work

Explain to students how their privacy is being protected and how the tool uses their data. For instance, explain that your institution has a contract with the AI developer (and share its specific data and privacy provisions), or that all Personally Identifiable Information (PII) has been removed from any material submitted to the AI model.

#4: What is an appropriate limit on AI use?

All instructors have the right to determine the amount of AI use they are comfortable with!

To determine your comfort level, visualize AI’s contributions to student work along a spectrum that incorporates the definitions of collaborative and cooperative: collaborative use sits at 50% AI contribution, and cooperative use is anything beyond that.

#5: How can I better understand allowable AI limits?

For a more detailed visualization, one that may help you appreciate AI’s relationship to key skills, tasks, and approaches, think about a coordinate plane.

The vertical axis represents the range of AI-student interaction in the process of completing a step, task, project, etc. It can range from “Low AI interaction allowed/okay/preferred” to “High AI interaction allowed/okay/preferred.”

The horizontal axis represents how important a skill or learning outcome is and how much independent mastery it demands. This range runs from a “non-demanding/non-essential skill” that requires process understanding but not independent mastery to a “demanding/essential skill” that requires both process understanding and independent mastery by a student.

Consider some examples from History instruction. Is it okay for students to explain the role of a thesis statement yet have AI draft one for them? Can students know the difference between primary and secondary sources, and be able to locate them, but have Artificial Intelligence summarize them? Given a student’s own notes, insights, and opinions, can AI write an essay or paper on their behalf using only those materials?

Figuring out where elements of your course fall along both axes will help you determine the circumstances under which Artificial Intelligence can best assist and support student learning!